Building a Large Multilingual Dataset

At the time this article is being written, BigScience has recently started training. The open science project by HuggingFace consists of a Large Language Model (LLM) that will be trained on approximately 350 billion tokens. This means roughly 1.5 TB of data in 46 different languages. To give you an idea of how much textual data this number represents, if we were to print all this text on A4 paper, we would have 5 mount Everests worth of data.

As I wrote in my previous article on the subject, this represents an incredible step forward in terms of multilingualism and diversity in the field. But, one can imagine, building such a large and diverse dataset is not an easy task. So let’s discover the process for building the multilingual dataset for BigScience.

The Data Work Necessary for Building a Multilingual Dataset for BigScience

When it comes to building such a large dataset in so many languages, there are several aspects to consider. The preparatory work is an important task in and of itself.

Firstly, it is essential to note that the data collected to create the dataset has intrinsic values and characteristics independent of the scope of the project. This is true for any Machine Learning project, but it is important to keep it in mind. Furthermore, there are model-specific requirements to meet when collecting the data. With these two essential aspects in mind, we can now have a look at how one would approach building a multilingual dataset.

The Goals that Guided the Dataset Curation

- Retrieve diverse content genres and geographical variety of sources.

- Prioritize human participation and expertise in the curation process to improve data quality and achieve curation choices accountability.

- Create a corpus of sufficient size to allow researchers to fully utilize the computing budget.

- To improve data governance through carefully selecting, processing, and documenting data sources.

The Challenges of Building a Large Multilingual Dataset

Let’s first look at the significant challenges such a dataset requires.

First, there is a trade-off to consider between the characteristics of the data we may prefer, and the availability of a large enough amount of data to train such a large language model. So balancing the different aspects of data work becomes even harder if we consider this trade-off in the equation.

There is then of course extensive legal work that needs to be done. The legal group for BigScience had to develop a comprehensive legal playbook to give the ML engineers a better understanding of their work. The playbook covers nine different jurisdictions, including different privacy and data protection laws.

This aspect is fundamental. In fact, a second group was dedicated exclusively to the identification of strategies to mitigate privacy risks.

Of course, the pivotal challenge is data sourcing and collection. For BigScience, international hackathons were organized to leverage 246 language resources and shortlist 605 websites.

Finally, data management is fundamental for the successful completion of the project. For BigScience, a dedicated group of researchers developed guidelines for international data governance, including legal and technical tools needed.

According to the HuggingFace BigScience blog, the huge amount of textual data involved posed other challenges as well; evaluating how automated changes in data curation affected results becomes really hard. At the same time, manually curating the dataset is impossible. To find a balance between automation and manually curating the data, different solution were adopted:

- engineers created tools to curate the data and keep a hand over automation

- languages to be included were selected based on the availability of fluent speakers. This means that researchers choose to concentrate their efforts on fewer languages, to make sure they had the necessary resources and time.

How the Data was Sourced and Collected

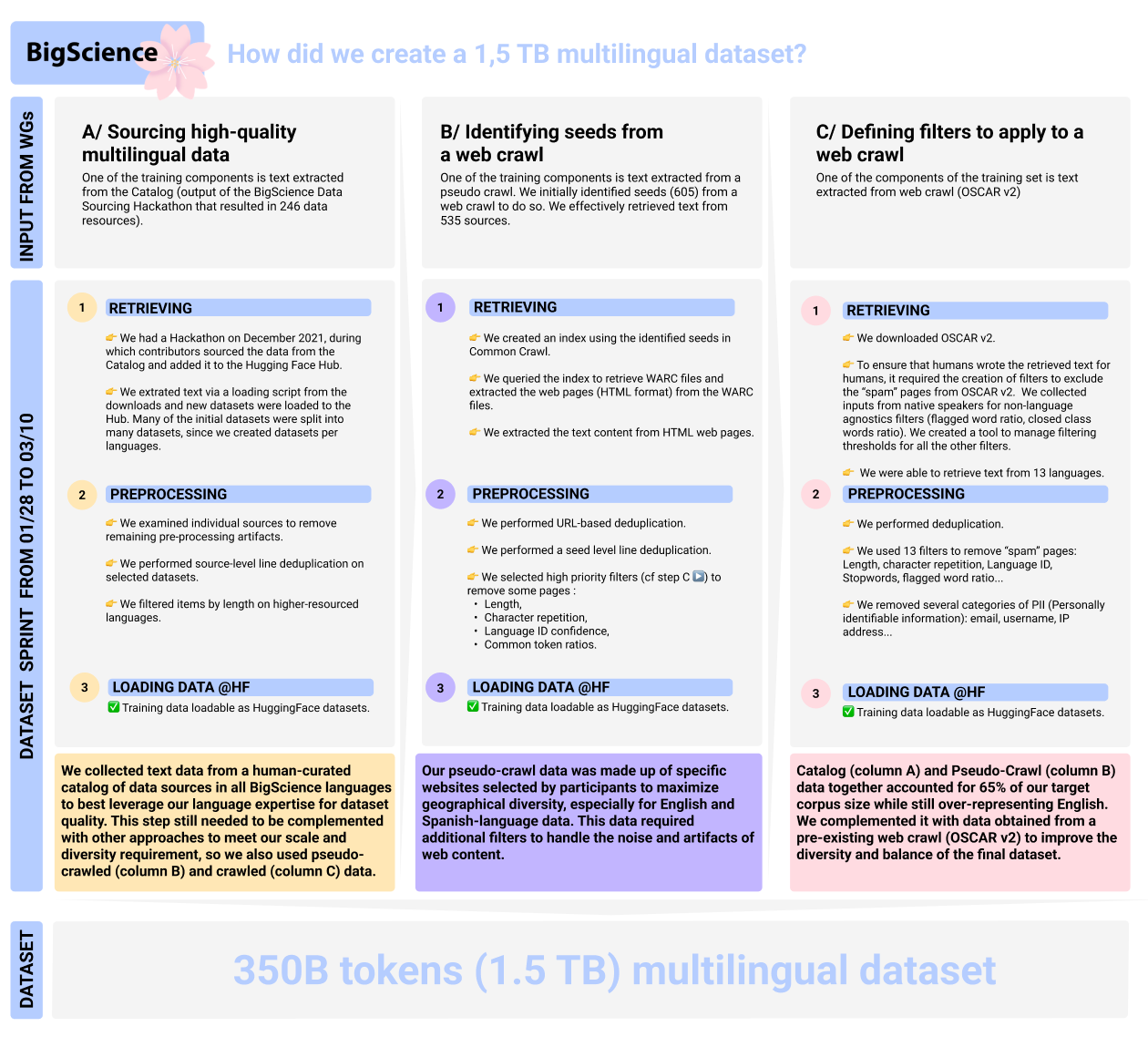

A dedicated sprint with +15 researchers began in January 2022 to gather the data, analyze it, and generate the training dataset. The information was gathered from three different sources:

- Many primary data sources and existing NLP datasets that participants desired to incorporate in the training corpus were included in the Data Sourcing Catalog.

- Additional targeted websites gathered through a pseudo crawl by members of the Data Sourcing group as indicative of a range of geographical linguistic variants.

- Data in some target languages was extracted from the OSCAR v2 web crawl dataset based on several language-specific data quality measures.

- Retrieval of the source data.

- Application of different pre-processing procedures, including visualization and data quality indicators.

- extraction and pre-processing of the text that would be used to train the model as ready-to-load datasets in the Hugging Face Hub before the training began.

Filtering and Establishing Data Quality

In the researchers’ words, the main goal to keep in mind during data filtering was to select text humans wrote for humans.

They found the best way to steer their curation efforts in this direction was to generate aggregated statistics for each data source, such as:

- analyzing the ratio of punctuation or special characters (an unusually high ration of punctuation usually indicates errors in the retrieval process, such as HTML code leaking through the text).

- defining lists of special class words; in each natural language document, we expect specified groups of frequent tokenswords (known as closed class words) to exist at a specific frequency. Tables, lists, and other papers with unusual layout tended to be associated with writings having a low common tokenclosed class word ratio. Initialized using part-of-speech data and checked by proficient language speakers, the appropriate lists of common tokenclosed class words were created.

- identifying a language identification confidence score, a metric that helps classify a text as pertaining or not to a language. Low language identification confidence scores usually point to errors in the retrieval or pre-processing steps.

- using similar indicators such as sequence length, perplexity, word repetition statistics, and flagged words in various languages.

Preparing the Data – Catalog and Web Crawl

The Catalog and Pseudo-Crawl were pre-processed similarly as they had all been manually selected by participants, with a combination of manual and automatic curation. The following steps were implemented:

- document-level and page-level deduplication;

- identification of data sources that needed further pre-processing through the above-mentioned filters;

- definition of pre-processing rules to make the text more human-readable (e.g. eliminating often occurring lines);

- filtering out of text items that were considered too short for high-resource languages. For low resource languages, shorter text extracts were concatenated when applicable;

- filtering out data based on indicators such as language ID confidence, character repetitions, length, and closed class word ratios.

OSCARv2

The OSCARv2 dataset was used as a base. Data in 13 languages was extracted from OSCARv2. Languages were chosen based on confidence in the precision of the language identification system. Keeping in mind the goal of retrieving pages written by humans for humans, researchers also defined a series of page-level metrics to define whether pages met or not the goal. Some of these metrics were implemented across the board, others were language specifics (e.g. closed class word ratio and flagged words). Parameters for these metrics were provided by fluent speakers.

Filtering and deduplication were applied next.

Personal information was also redacted from the dataset.

Next Steps and Conclusion

Researchers specified that “The next few months will be focused on leveraging this work to incrementally release either an extensive visualization or the full content of the sources according to the recommendations of our Data Governance work“.

Some resources are already ready to be released, others will be further processed before release, and others will not be fully released, either for privacy or licensing reasons.

So there you have it! This is how one tackles a challenge such as building a multilingual dataset for BigScience. I hope you had fun peeking through the curtain to have a glimpse of such a fascinating process! We will probably have updates to share on the project soon, so stay tuned!