Here is the deal. We are all pretty stoked about the huge leaps forward we as a community are making with Artificial Intelligence, especially in NLP, where exciting new models seem to come out every other day. But – there is always a “but” – it is easy to forget the other side of the coin: the carbon footprint of training large models such as Transformers. The environmental impact of Artificial Intelligence and Machine Learning is particularly worrying given the current trend in the industry, where ever larger models are being trained and used more and more often.

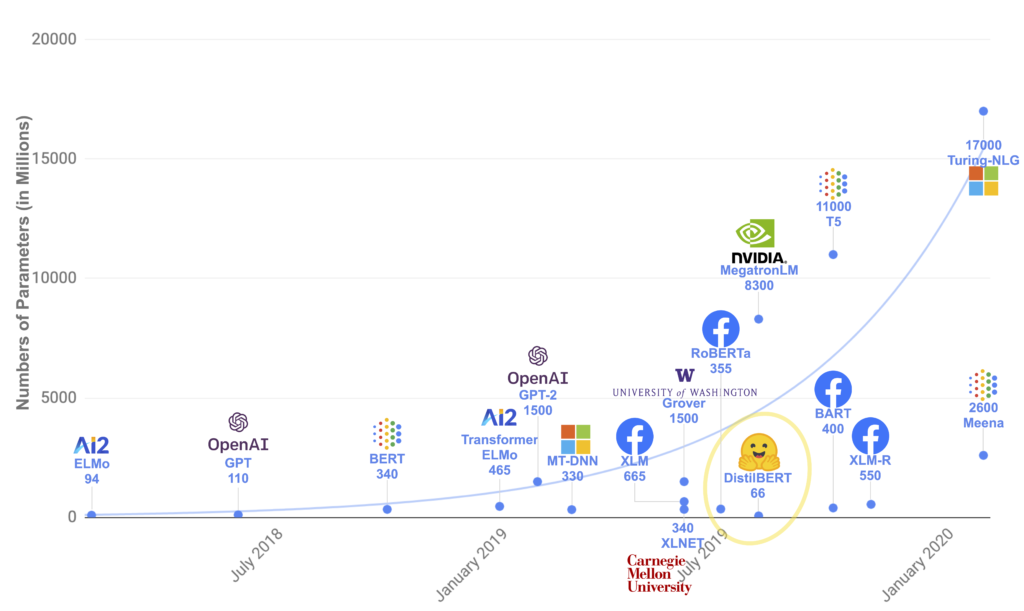

As you can see from the graph above (Fig 1), to improve performance, model size and training data keep increasing, which translates into a significant carbon footprint because of the computational power required. And the energy consumed multiplies when you consider that these models need to be trained and re-trained multiple times to achieve the best results.

I hadn’t thought about how bad training large Machine Learning models is for the environment until I read about it in the Hugging Face documentation [1]. Since I experiment a lot with transformers, I wanted to know the environmental impact of my work. Even though I mostly run smaller experiments, I still use large models from open-source libraries, and I wanted at least to be aware of the consequences. So, naturally, I started looking for articles on the subject.

Finding Sources about AI Environmental Impact

If you do a simple Google search for “AI environmental impact” or “AI carbon footprint”, you may run across clickbait-ish articles with grabby titles such as “Creating an AI can be five times worse for the planet than a car” [2] or “AI’s carbon footprint will be an issue for enterprises” [3], which make the future of AI seem pretty bleak, if not catastrophic.

While you can find many opinion pieces and feature articles along the same lines, I wanted to read scientific papers on the subject. So I started with the original paper [4] cited in the first article I mentioned.

Scientific Papers about The Environmental Impact of Artificial Intelligence

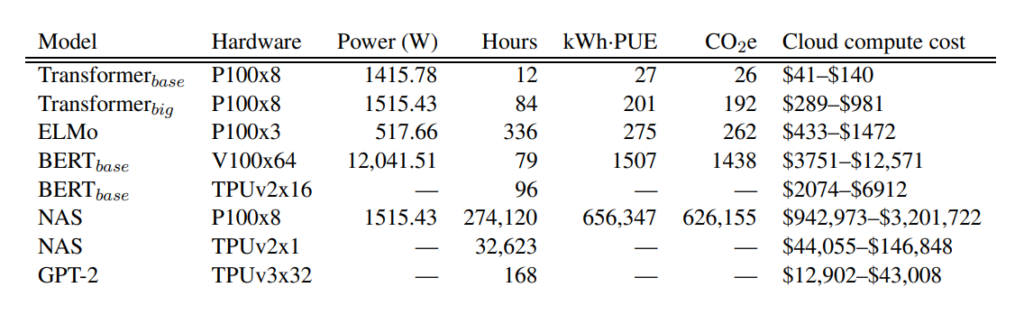

In the paper, published in 2019, the authors quantify “the approximate financial and environmental costs” of training large language models, and even provide recommendations on how to reduce carbon emissions when training and experimenting with these power-hungry models. Their methodology is pretty straightforward: they selected a series of large, state-of-the-art NLP models widely used in the industry and trained them.

They used the default settings provided by the researchers who developed the models in the first place, and monitored GPU and CPU power consumption during training. Each model was trained for a maximum of one day on a single NVIDIA Titan X GPU (except for ELMo, which was trained on 3 NVIDIA GTX 1080 Ti GPUs). They then estimated the time needed to train each model to completion, using the information reported in the original papers. Finally, they calculated the total power consumption and the associated CO2 emissions.
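If you want to run this kind of estimate yourself, the calculation in [4] boils down to the average power draw of CPU, DRAM and GPUs, multiplied by the training time and by a datacenter Power Usage Effectiveness (PUE) factor, then converted to CO2e with an average grid emission factor. As I understand the paper, it uses a PUE of 1.58 and 0.954 pounds of CO2e per kWh (a US average). The function below is a minimal sketch of that formula; the power draws in the example are made-up numbers, not measurements from the paper.

```python
# Minimal sketch of the estimate described in [4]:
#   p_t  = PUE * t * (p_c + p_r + g * p_g) / 1000   [kWh]
#   CO2e = 0.954 * p_t                              [lbs]
# Constants as reported in [4]; the example inputs are hypothetical.

PUE = 1.58                 # datacenter Power Usage Effectiveness used in [4]
LBS_CO2E_PER_KWH = 0.954   # average US emission factor used in [4]


def training_energy_kwh(hours, cpu_watts, dram_watts, gpu_watts, num_gpus):
    """Energy (kWh) drawn during training, including datacenter overhead."""
    watts = cpu_watts + dram_watts + num_gpus * gpu_watts
    return PUE * hours * watts / 1000


def training_co2e_lbs(hours, cpu_watts, dram_watts, gpu_watts, num_gpus):
    """Estimated CO2-equivalent emissions (pounds) for the training run."""
    return LBS_CO2E_PER_KWH * training_energy_kwh(hours, cpu_watts, dram_watts, gpu_watts, num_gpus)


# Hypothetical run: 24 hours, one GPU averaging 250 W, CPU 100 W, DRAM 50 W.
energy = training_energy_kwh(24, cpu_watts=100, dram_watts=50, gpu_watts=250, num_gpus=1)
co2e = training_co2e_lbs(24, cpu_watts=100, dram_watts=50, gpu_watts=250, num_gpus=1)
print(f"{energy:.2f} kWh, {co2e:.2f} lbs CO2e")
```

The real work is in measuring the average power draws and in estimating the full training time, which is exactly where the paper had to rely on the figures reported by the original authors.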

You can see the results of their experiments below.

Another article, “The Ecological Impact of High-performance Computing in Astrophysics” [5], published in 2020, raises similar concerns about scripting languages and the inefficient computational paradigms common in astrophysics research. The author even compared different programming languages in terms of their energy efficiency and found Python, which is widely used in scientific research, to be the least efficient.

If we were to stop here and just look at these results, it would indeed seem that those flashy articles were right, and that AI (and in particular NLP) experiments really do have disastrous consequences for the environment. So is that it? Are we really destroying the environment in our scientific quest for knowledge, with all our large models and fine-tuning runs, and with our constant use of Python for scientific scripting?

Well, not really.

The Complex Truth about Artificial Intelligence and the Environment

The truth is, as always, that the situation is far more complex. While computing, especially for scientific research, is indeed a power-hungry endeavor (and this seems to be particularly true for NLP research), there are other aspects we need to consider.

First, the scientific community is aware of the problem and is trying to find ways to make research more efficient. In [4], the authors themselves suggest several ways in which researchers can reduce the carbon footprint of their experiments. Other studies have already explored ways to optimize energy consumption during training. In [6], for example, the authors propose a new way to share parameters across the layers of a network, with the goal of generating the weights of a neural network within a given parameter budget.

Furthermore, MIT researchers have developed a new automated AI system for training and running certain neural networks. Their technique, dubbed a once-for-all network, trains a single large neural network made up of numerous pre-trained subnetworks of various sizes, which can be tailored to diverse “hardware platforms without retraining” [7]. This cuts in half the amount of energy needed to train each specialized neural network for new platforms, which can include billions of internet of things (IoT) devices. They estimated that using the technique to train a computer vision model required around 1/1,300 of the carbon emissions of today’s state-of-the-art neural architecture search approaches, while reducing inference time by 1.5 to 2.6 times [7].
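The core idea, weight sharing, can be illustrated with a toy example. This is not the MIT implementation: it is a minimal pure-Python sketch, assuming a single fully connected layer whose smaller “subnetworks” simply reuse the first `width` output units of one shared weight matrix, so that picking a smaller model for a weaker device requires no extra training. The class, method names and device labels are hypothetical.

```python
import random


class SharedLinear:
    """Toy 'supernet' layer: one weight matrix shared by subnetworks of several widths."""

    def __init__(self, in_features, max_out_features, seed=0):
        rng = random.Random(seed)
        # Trained once (here just randomly initialized); every subnetwork reads from it.
        self.weights = [[rng.uniform(-1, 1) for _ in range(in_features)]
                        for _ in range(max_out_features)]

    def forward(self, x, width):
        """Run the subnetwork that keeps only the first `width` output units."""
        if not 1 <= width <= len(self.weights):
            raise ValueError("width out of range")
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights[:width]]

    def num_params(self, width):
        """Number of weights actually used by a subnetwork of the given width."""
        return width * len(self.weights[0])


layer = SharedLinear(in_features=4, max_out_features=8)
x = [0.5, -1.0, 2.0, 0.0]
# Hypothetical deployment targets: a wide model for a server, a narrow one for an IoT device.
for device, width in [("server", 8), ("iot-device", 2)]:
    out = layer.forward(x, width)
    print(device, len(out), layer.num_params(width))
```

Since the small subnetwork’s outputs are literally a slice of the large one’s, switching targets costs nothing in training; the real once-for-all approach is far more involved and varies more than just the width.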

Even Google has put its DeepMind technology to good use, exploiting machine learning to “consume less energy and help address one of the biggest challenges of all — climate change” [8]. DeepMind’s AI is now used to improve the energy efficiency of the company’s data centers, cutting the energy used for cooling by 40%, which equates to a 15% reduction in overall Power Usage Effectiveness (PUE) overhead. Improving the efficiency of Google’s data center cooling will also “help other companies who run on Google’s cloud to improve their own energy efficiency” [8].
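Power Usage Effectiveness is the ratio between the total energy used by a facility and the energy delivered to its IT equipment, so an ideal datacenter would have a PUE of 1.0 and everything above 1.0 is overhead (cooling, power conversion losses and so on). The short sketch below uses entirely made-up numbers, not Google’s, to show why a 40% cut in cooling energy translates into a smaller reduction of the overall overhead: cooling is only one part of it.

```python
def pue(it_kwh, cooling_kwh, other_overhead_kwh):
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    return (it_kwh + cooling_kwh + other_overhead_kwh) / it_kwh


# Hypothetical datacenter (illustrative numbers only).
it_kwh = 1000.0
cooling_kwh = 100.0
other_overhead_kwh = 60.0   # electrical losses and other non-cooling inefficiencies

before = pue(it_kwh, cooling_kwh, other_overhead_kwh)
after = pue(it_kwh, cooling_kwh * 0.6, other_overhead_kwh)   # 40% less cooling energy

# Overhead is the part of PUE above 1.0.
overhead_cut = ((before - 1) - (after - 1)) / (before - 1)
print(f"PUE {before:.3f} -> {after:.3f}, overhead reduced by {overhead_cut:.0%}")
```

With these placeholder numbers the overhead shrinks by a quarter; the actual figure depends on how much of a facility’s overhead comes from cooling.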

Apples and Oranges, Cars and AI Models

But that’s not all.

While it is tempting to say that training a large model can produce as much CO2 as a car over its lifetime, we must also point out that the comparison, while catchy, is somewhat flawed. For one, there are millions of cars on the road in the US alone, whereas far fewer large ML models are being trained. Furthermore, while the authors warn us about the carbon footprint of GPUs and CPUs, at the time of the study no data was available yet on the energy efficiency of TPUs, which are widely used for NLP language modelling.

Nonetheless, Google has published an interesting read [9] about TPU performance. In the article, TPUs are shown to be far more energy efficient than conventional processors, delivering 30 to 80 times higher performance per watt. Therefore, using TPUs, which were specifically designed to accelerate machine learning workloads, can reduce the carbon footprint of training large models. Google, like the other FAANG companies, is well aware of the computational costs of Machine Learning, and even if these companies might only care about the monetary and time-saving advantages of optimization, they are still working to improve computational efficiency. In the same article, Google acknowledges that traditional processors and architectures are inadequate for these workloads and require significantly more resources, and that energy consumption therefore remains a concern. You will find more information about Google’s efforts towards improved efficiency here [10].

The Importance of Energy Sources

Another point to consider is that, in [4], the authors only take into account the average US energy mix when calculating the CO2 emissions of these models. Probably due to a lack of data on the subject, they did not consider the varying energy sources actually used for Machine Learning. It appears, in fact, that large groups and corporations do not usually rely on the same power sources as smaller actors.
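To see why the energy mix matters so much, remember that emissions are simply the energy consumed multiplied by the carbon intensity of the electricity that supplied it. The sketch below applies the same hypothetical 1,000 kWh training run to three made-up carbon intensities; the grid labels and values are placeholders, not real grid data.

```python
# Emissions = energy consumed x carbon intensity of the electricity that supplied it.
TRAINING_KWH = 1000.0  # hypothetical energy used by one training run

# Hypothetical carbon intensities in kg CO2e per kWh (placeholders, not real grid data).
grid_intensity = {
    "coal-heavy grid": 0.9,
    "average mixed grid": 0.4,
    "mostly renewable grid": 0.05,
}

emissions = {grid: TRAINING_KWH * kg_per_kwh for grid, kg_per_kwh in grid_intensity.items()}

# Print from cleanest to dirtiest supply.
for grid, kg in sorted(emissions.items(), key=lambda item: item[1]):
    print(f"{grid:>22}: {kg:7.1f} kg CO2e")
```

With these placeholder numbers, the exact same run emits 18 times more on the coal-heavy grid than on the mostly renewable one, which is why a single national average can be misleading.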

In fact, many big tech companies have started shifting towards renewable energy sources to power their massive data centers and infrastructure. On this page [11] you will find more information about Google’s efforts to reach its zero-emissions goal by 2030. Facebook has pledged to reach the same goal across its entire value chain by the same year [12].

According to the same Utility Dive article [12], other tech corporations, such as AT&T and Microsoft, have announced a substantial number of partnerships aimed at powering their data centers sustainably, and Amazon was the largest corporate buyer of renewable energy in 2020, with 3.16 GW. According to Kara Hurst, Amazon’s vice president of sustainability, the company is on track to reach 100 percent renewable energy by 2025. In February, Amazon also signed a 380 MW contract for offshore wind in Europe, the first corporate procurement of offshore wind by a US-based company.

The Push for Renewable Energies in AI

As more organizations strive toward their sustainability goals, corporate interest in acquiring renewables and storage is projected to grow.

The hope is that the giants of Silicon Valley will pave the way, either by setting a good example or through their lobbying resources, sparking an environmental revolution with ripple effects in other industries as well. Tech companies have enormous influence, both in setting trends and in lobbying. Thinking they might, for once, use their powers for good may sound like wishful thinking, but in this case it doesn’t appear to be too far from reality.

Finally, when it comes to AI’s environmental impact, it is important to note that AI applications are actually being used to reduce carbon emissions and/or optimize energy consumption in various sectors. I will not go into too much detail, since AI for Good applications are numerous and span a wide range of sectors, but it is worth noting that these projects do exist and are operational. If you want to know more about this subject, read my article on AI for Good and how it is helping achieve the United Nations’ Sustainable Development Goals.

So it seems the issue is far more complex than one might expect, with many factors at play. Still, what can we do to reduce the environmental impact of our research?

What Can We Do?

First, I believe it is important to at least be aware. It is easy to forget that our actions as researchers, data scientists and programmers can have an impact on the world outside our organizations as well. Staying informed on the subject and actively researching ways to improve one’s own energy efficiency is definitely a first step in the right direction.

The authors of [13] also provide valuable suggestions for improving energy efficiency when training Machine Learning models, namely:

- Researchers should measure energy usage and CO2e, or at least generate a rough estimate using a tool like the ML Emissions Calculator [14]. They should then publish the data to make the carbon costs of training transparent;

- Efficiency, in addition to accuracy and related metrics, should be a criterion for publishing ML research on computationally intensive models. The ultimate goal should be to encourage progress across the board and push researchers to reduce the energy demand of their experiments;

- Even if we could reduce CO2e emissions in cloud datacenters to zero, cutting training time would still be critical because “time is money,” and cheaper training allows more people to participate. More researchers should disclose the number of accelerators and the time it takes to train computationally intensive models, in order to spur progress in lowering training costs.
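To give a rough idea of what such an estimate and disclosure might look like, here is a minimal sketch in the spirit of the ML Emissions Calculator [14], which, as I understand it, works from the hardware used, the training time and the carbon intensity of the region. The `TrainingReport` fields, the `estimate_report` function and all the numbers below are hypothetical; for real reporting you would use measured power draw and your provider’s actual figures.

```python
from dataclasses import dataclass, asdict


@dataclass
class TrainingReport:
    """Hypothetical summary of what a paper could disclose about a training run."""
    accelerator: str
    num_accelerators: int
    hours: float
    energy_kwh: float
    co2e_kg: float


def estimate_report(accelerator, num_accelerators, watts_per_accelerator,
                    hours, pue, kg_co2e_per_kwh):
    """Rough estimate: power x count x time x datacenter overhead, then x grid intensity."""
    energy_kwh = watts_per_accelerator * num_accelerators * hours * pue / 1000
    return TrainingReport(accelerator, num_accelerators, hours,
                          round(energy_kwh, 2), round(energy_kwh * kg_co2e_per_kwh, 2))


# Hypothetical run: 8 accelerators drawing ~300 W each for 72 hours.
report = estimate_report("example-gpu", num_accelerators=8, watts_per_accelerator=300,
                         hours=72, pue=1.1, kg_co2e_per_kwh=0.4)
print(asdict(report))
```

Keeping the number of accelerators and the training time in the report, not just the final CO2e figure, is what makes it useful for comparing training costs across papers.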

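Furthermore, the authors of [13] identify concrete opportunities to improve energy efficiency when training ML models: large but sparsely activated models can consume less than a tenth of the energy of large dense ones; the location of the datacenter matters, since the share of carbon-free energy varies considerably from place to place; and cloud datacenters and ML-oriented accelerators can be more efficient than typical datacenters and off-the-shelf hardware. Overall, they estimate that the choice of model, datacenter and processor can reduce the carbon footprint by up to roughly 100-1000x.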
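These savings compound: emissions are roughly the product of the computation needed, the energy per computation, the datacenter overhead (PUE) and the carbon intensity of the electricity, so improvements on separate factors multiply rather than add up. A toy sketch with made-up improvement factors (not figures from [13]):

```python
from math import prod

# Hypothetical reduction factors, each acting on a different term of
# emissions = computation x energy per computation x datacenter overhead x grid intensity.
improvements = {
    "more efficient model architecture": 4.0,      # less computation needed
    "more efficient processor": 2.0,               # less energy per computation
    "more efficient datacenter": 1.5,              # lower overhead (PUE)
    "cleaner energy at the chosen location": 5.0,  # lower carbon intensity
}

baseline_kg = 1000.0  # hypothetical emissions of the original setup
combined = prod(improvements.values())
print(f"Combined reduction: {combined:.0f}x, "
      f"{baseline_kg:.0f} kg -> {baseline_kg / combined:.1f} kg CO2e")
```

None of these made-up factors is dramatic on its own, yet together they cut emissions sixtyfold, which is how large overall reductions can arise from several modest choices.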

The Underwhelming Truth

So there you have it: while Machine Learning, and NLP in particular, does have a significant impact on the environment in terms of energy demand, and consequently CO2 and CO2-equivalent emissions*, there are also mitigating factors at play, and large players and researchers in the industry are aware of the energy cost of Machine Learning. What matters is to be aware of these downsides of ML, to do our part in reducing the industry’s impact on the environment, and to strive collectively for a greener, more accessible AI.

*CO2-equivalent emissions account not only for carbon dioxide but also for all other greenhouse gases, weighted by their global warming potential.
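In practice, each gas is converted to CO2 equivalents by multiplying its mass by its global warming potential (GWP), i.e. the warming it causes relative to the same mass of CO2 over a chosen time horizon. The sketch below uses the IPCC AR5 100-year values for methane (28) and nitrous oxide (265), without climate-carbon feedbacks, purely for illustration; the emission amounts are made up.

```python
# 100-year global warming potentials (IPCC AR5, without climate-carbon feedbacks), for illustration.
GWP100 = {"CO2": 1, "CH4": 28, "N2O": 265}


def co2_equivalent(emissions_kg):
    """Convert a {gas: kg emitted} mapping into total kg of CO2e."""
    return sum(kg * GWP100[gas] for gas, kg in emissions_kg.items())


# Hypothetical emissions from some activity, in kg.
total = co2_equivalent({"CO2": 1000, "CH4": 2, "N2O": 0.1})
print(f"{total:.1f} kg CO2e")
```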

More Articles You Can Read

If you want to read more on the subject, here are six articles worth reading, besides the ones already cited above:

- “The most sustainable energy is the energy you don’t use,” 2018. EKOenergy. URL https://www.ekoenergy.org/the-most-sustainable-energy-is-the-energy-you-dont-use/ (accessed 1.18.22).

- Schwartz, R., Dodge, J., Smith, N.A., Etzioni, O., 2020. Green AI. Communications of the ACM 63(12). URL https://cacm.acm.org/magazines/2020/12/248800-green-ai/fulltext (accessed 1.18.22).

- Henderson, P., Hu, J., Romoff, J., Brunskill, E., Jurafsky, D., Pineau, J., 2020. Towards the Systematic Reporting of the Energy and Carbon Footprints of Machine Learning. Journal of Machine Learning Research 21, 1–43. URL https://jmlr.org/papers/volume21/20-312/20-312.pdf (accessed 1.18.22).

- Lannelongue, L., Grealey, J., Inouye, M., 2020. Green Algorithms: Quantifying the carbon footprint of computation. arXiv:2007.07610 [cs]. URL https://arxiv.org/pdf/2007.07610.pdf (accessed 1.18.22).

- Gupta, U., Kim, Y.G., Lee, S., Tse, J., Lee, H.-H.S., Wei, G.-Y., Brooks, D., Wu, C.-J., 2020. Chasing Carbon: The Elusive Environmental Footprint of Computing. arXiv:2011.02839 [cs]. (accessed 1.18.22).

- “A.I.’s carbon footprint is big, but can be easily reduced say Google researchers,” Fortune. URL https://fortune.com/2021/04/21/ai-carbon-footprint-reduce-environmental-impact-of-tech-google-research-study/ (accessed Jan. 11, 2022).

Sources

[1] “Transformer models – Hugging Face Course.” https://huggingface.co/course/chapter1/4 (accessed Jan. 18, 2022).

[2] “Creating an AI can be five times worse for the planet than a car | New Scientist.” https://www.newscientist.com/article/2205779-creating-an-ai-can-be-five-times-worse-for-the-planet-than-a-car/ (accessed Jan. 18, 2022).

[3] “AI’s carbon footprint will be an issue for enterprises,” SearchCIO. https://searchcio.techtarget.com/feature/AIs-carbon-footprint-will-be-an-issue-for-enterprises (accessed Jan. 11, 2022).

[4] E. Strubell, A. Ganesh, and A. McCallum, “Energy and Policy Considerations for Deep Learning in NLP,” arXiv:1906.02243 [cs], Jun. 2019, Accessed: Jan. 11, 2022. [Online]. Available: http://arxiv.org/abs/1906.02243

[5] S. P. Zwart, “The Ecological Impact of High-performance Computing in Astrophysics,” Nat Astron, vol. 4, no. 9, pp. 819–822, Sep. 2020, doi: 10.1038/s41550-020-1208-y.

[6] B. A. Plummer, N. Dryden, J. Frost, T. Hoefler, and K. Saenko, “Neural Parameter Allocation Search,” arXiv:2006.10598 [cs, stat], Apr. 2021, Accessed: Jan. 12, 2022. [Online]. Available: http://arxiv.org/abs/2006.10598

[7] “Reducing the carbon footprint of artificial intelligence,” MIT News | Massachusetts Institute of Technology. https://news.mit.edu/2020/artificial-intelligence-ai-carbon-footprint-0423 (accessed Jan. 12, 2022).

[8] “DeepMind AI reduces energy used for cooling Google data centers by 40%,” Google, Jul. 20, 2016. https://blog.google/outreach-initiatives/environment/deepmind-ai-reduces-energy-used-for/ (accessed Jan. 12, 2022).

[9] “Quantifying the performance of the TPU, our first machine learning chip,” Google Cloud Blog. https://cloud.google.com/blog/products/gcp/quantifying-the-performance-of-the-tpu-our-first-machine-learning-chip/ (accessed Jan. 12, 2022).

[10] “Efficiency – Data Centers – Google,” Google Data Centers. https://www.google.com/intl/it/about/datacenters/efficiency/ (accessed Jan. 12, 2022).

[11] “24/7 Clean Energy – Data Centers – Google,” Google Data Centers. https://www.google.com/intl/it/about/datacenters/cleanenergy/ (accessed Jan. 12, 2022).

[12] “Facebook meets 100% renewable energy goal with over 6 GW of wind, solar, 720 MW of storage,” Utility Dive. https://www.utilitydive.com/news/facebook-meets-100-renewable-energy-goal-with-over-6-gw-of-wind-solar/598453/ (accessed Jan. 12, 2022).

[13] D. Patterson et al., “Carbon Emissions and Large Neural Network Training,” arXiv:2104.10350 [cs], Apr. 2021, Accessed: Jan. 11, 2022. [Online]. Available: http://arxiv.org/abs/2104.10350

[14] A. Lacoste, A. Luccioni, V. Schmidt, and T. Dandres, “Quantifying the Carbon Emissions of Machine Learning,” arXiv:1910.09700 [cs], Nov. 2019, Accessed: Jan. 18, 2022. [Online]. Available: http://arxiv.org/abs/1910.09700